RDFeasy: scalable RDF publishing in the AWS cloud

"Now that was easy!"

Background

One of my favorite Linked Data products was Kasabi, championed by Leigh Dodds. Kasabi was a platform that let people upload RDF files to a web site and publish them in a SPARQL 1.1 endpoint. Free data could be published for free, and there were plans to let people sell access to data to others.

It was a nice idea and a beautiful implementation, but it didn't work in the marketplace. Thankfully, the data was in a standard format, so it is still downloadable.

I was interested in working with data sets such as Freebase and DBpedia, and it wasn't easy to use Kasabi for data sets in the 50 million to 1 billion triple range. The folks there helped me get the Ookaboo dataset online, but soon after that they went out of business.

API Economics

Value, price and cost are different things, and there are different definitions of profit. A requirement for sustainability, however, is that operating costs must be met in the long term.

A public SPARQL endpoint might be run by an academic group on a research grant or by a triple store vendor that wants to show off its wares. Either way, there isn't a direct link between the cost of running the store and the value it creates: it's predictable that more use will lead to higher costs, but there's no guarantee that the organization can spend enough on hardware to keep it going.

For a free or a paid service alike, it's particularly problematic that people use services differently. Some people ask for one triple at a time, which is inefficient because every tiny request carries the full overhead of a round trip, while others submit, deliberately or accidentally, queries that could take minutes or even years to run. Even if you devised a pricing scheme that worked, there's still the difficult technical problem of keeping one user's activity from affecting the performance of others.

Value per bit

One answer to the sustainability problem is to charge a lot of money for the service. Salesforce.com does this. The Enterprise edition of the Sales Cloud costs $125 a month per user, a fraction of what a salesperson costs. A fair-sized customer could have 1,000 salespeople and a database of 10 million entities, so supporting that much data on $125,000 a month in cash flow is no miracle. Salesforce.com hosts it all on gold-plated Sun hardware running an Oracle database, and it deals with customer interactions by building a system that is many, many times larger than any individual customer needs.
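To make the "value per bit" comparison concrete, here is the arithmetic from the paragraph above as a small Python sketch. The price, headcount and entity count are the figures quoted in the text; the revenue per entity is simply derived from them and is not a number Salesforce.com publishes.

```python
# Back-of-the-envelope "value per bit" arithmetic for the Salesforce.com example.
# Inputs are the figures quoted in the text; revenue per entity is derived here.
PRICE_PER_USER_PER_MONTH = 125   # USD, Sales Cloud Enterprise edition (as quoted)
SALESPEOPLE = 1_000              # a fair-sized customer
ENTITIES = 10_000_000            # records in that customer's database

monthly_revenue = PRICE_PER_USER_PER_MONTH * SALESPEOPLE
revenue_per_entity = monthly_revenue / ENTITIES

print(f"monthly revenue: ${monthly_revenue:,}")                      # $125,000
print(f"revenue per entity per month: ${revenue_per_entity:.4f}")  # $0.0125
```

Roughly a cent and a quarter per record per month is a generous budget for storing and serving each item.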

This is nice work if you can get it.

For many applications, particularly "big data" applications, the user doesn't get anywhere near the value per bit of a CRM system, so the rest of us need a cloud of a different kind. (The last time I went to Dreamforce, I was told that a single copy of the Salesforce.com system filled up less than a cage in a data center.)

Low-cost RDF publishing in the cloud

I got the idea for RDFeasy after I'd done an economic analysis of the Map/Reduce pipeline that produces :BaseKB. Once that kind of analysis had become a habit, it was clear that producing a triple store distribution of 770 million triples would cost just a few dollars, less than running the Map/Reduce pipeline itself. After the database is imaged as an Amazon Machine Image (AMI), storing the AMI would cost about $5 a month. You could boot an instance from the AMI and be using the database 10 minutes later, at a cost of under 50 cents an hour.
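The economics above can be written down as a tiny cost model. This is a sketch, not part of RDFeasy: the class and method names are hypothetical, the $3 build cost is an assumed value for "a few dollars", and the $5/month storage and $0.50/hour instance figures are the approximate upper bounds quoted in the text rather than current AWS prices.

```python
from dataclasses import dataclass


@dataclass
class PublishingCostModel:
    """Hypothetical cost model for shipping a triple store as an AMI.

    Defaults are the rough figures from the text (the build cost is an
    assumed value for "a few dollars"); they are not current AWS prices.
    """
    build_cost: float = 3.00             # one-time: load the data and create the image (USD)
    ami_storage_per_month: float = 5.00  # publisher's recurring cost to keep the AMI (USD)
    instance_per_hour: float = 0.50      # consumer's cost while an instance runs (USD)

    def publisher_cost(self, months: int) -> float:
        """The publisher pays once to build, then a flat fee that doesn't depend on usage."""
        return self.build_cost + self.ami_storage_per_month * months

    def consumer_cost(self, hours: float, instances: int = 1) -> float:
        """Each consumer pays only for the instance-hours they actually run."""
        return self.instance_per_hour * hours * instances


model = PublishingCostModel()
print(model.publisher_cost(months=12))  # 63.0
print(model.consumer_cost(hours=8))     # 4.0
```

The point of the split is that `publisher_cost` has no usage term: however many people boot the AMI, the publisher's bill stays flat, while each consumer's bill scales with their own usage.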

In this model, end users pay for a bundle of hardware, software and data, and can run as many instances as they need. In fact, if you put instances launched from the AMI behind Elastic Load Balancer (for example, in an Auto Scaling group), you could scale out to meet a growing query rate, since each instance holds its own complete copy of the data.
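Because every instance booted from the AMI carries a full, read-only copy of the database, scaling out is a matter of dividing the load. The sketch below estimates how many instances a given query rate needs. The per-instance throughput is a hypothetical parameter you would have to measure for your own query mix, since, as noted earlier, query costs vary enormously; the function names are illustrative only.

```python
import math


def instances_needed(queries_per_second: float, per_instance_qps: float) -> int:
    """Smallest number of identical instances whose combined throughput covers the load.

    Assumes each instance holds a complete read-only copy of the data, so a load
    balancer can spread queries evenly with no coordination between instances.
    """
    if per_instance_qps <= 0:
        raise ValueError("per_instance_qps must be positive")
    return max(1, math.ceil(queries_per_second / per_instance_qps))


def fleet_cost_per_hour(queries_per_second: float, per_instance_qps: float,
                        instance_per_hour: float = 0.50) -> float:
    """Hourly bill for the fleet, using the text's rough $0.50/hour per instance."""
    return instances_needed(queries_per_second, per_instance_qps) * instance_per_hour


# Hypothetical example: 45 queries/s against instances that each sustain 10 queries/s.
print(instances_needed(45, 10))     # 5
print(fleet_cost_per_hour(45, 10))  # 2.5
```

Cost grows linearly with load, and it is paid by whoever runs the fleet, not by the publisher of the AMI.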

Economically, the publisher has predictable and fixed costs to provide a product, because it doesn't need to host a server. Consumers, on the other hand, can buy capacity as they need it, with no barrier to entry.

Two key advances have been the introduction of SSD cloud instances and the performance improvements in OpenLink Virtuoso 7. SSD cloud instances matter not just because they are fast, but because they are fast at random access. A hypervisor interleaves the I/O of many virtual machines, so even one machine's sequential reads reach the storage as random access (the "I/O blender"). Because SSDs handle random access well, they defeat the I/O blender and we get predictable results.
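The I/O blender effect is easy to see in a toy model. The sketch below assumes a hypervisor that serves three VMs round-robin (a simplification; real schedulers are more complex), each reading consecutive blocks from its own region of a shared disk, and counts how often the disk has to jump to a non-adjacent block.

```python
from itertools import zip_longest


def blend(streams):
    """Interleave per-VM request streams round-robin, as a shared disk might see them."""
    merged = []
    for batch in zip_longest(*streams):
        merged.extend(block for block in batch if block is not None)
    return merged


def count_jumps(requests):
    """Count requests that don't go to the block right after the previous request.

    On a spinning disk each jump costs a seek; on an SSD a random read costs
    far less than a seek, so jumps matter much less.
    """
    return sum(1 for prev, cur in zip(requests, requests[1:]) if cur != prev + 1)


# Three VMs, each reading 4 consecutive blocks from its own region of the disk.
vm_streams = [list(range(start, start + 4)) for start in (0, 1000, 2000)]

per_vm_jumps = sum(count_jumps(s) for s in vm_streams)
blended = blend(vm_streams)
blended_jumps = count_jumps(blended)

print(per_vm_jumps)   # 0: each VM on its own reads sequentially
print(blended_jumps)  # 11: after blending, every request is a jump
```

Each VM believes it is reading sequentially, but the shared device sees nothing but jumps, which is why storage that is fast at random access gives predictable results.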

Devops Revolution

Now it's a bit silly to talk about a $3 compute job when even the slightest amount of babysitting by a human costs a lot more than that.

Automation, and the accompanying standardization, is the third key idea behind RDFeasy. By using a controlled combination of hardware and software, we get consistent results. With consistent results, it is possible to build a checklist with a specific set of steps to load data into the system. RDFeasy does not currently automate events that occur outside the virtual machine; instead, it provides something like an API that accomplishes all of the tasks that can be done on the virtual machine.
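Here is a minimal sketch of the checklist idea: a fixed sequence of named steps run in order, stopping at the first failure and leaving a log that shows exactly where things went wrong. The step names and actions are hypothetical placeholders; this is not RDFeasy's actual interface or its list of loading steps.

```python
from typing import Callable, List, Tuple

# A step is a human-readable name plus an action; these are illustrative only.
Step = Tuple[str, Callable[[], None]]


def run_checklist(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run steps in order, stop at the first exception, and return (success, log)."""
    log: List[str] = []
    for name, action in steps:
        try:
            action()
        except Exception as exc:
            log.append(f"FAILED {name}: {exc}")
            return False, log
        log.append(f"ok {name}")
    return True, log


# Hypothetical steps; a real checklist would call the database's own load tools.
loaded: List[str] = []
steps: List[Step] = [
    ("check free disk space", lambda: None),
    ("bulk load N-Triples", lambda: loaded.append("data.nt")),
    ("verify triple count", lambda: None),
]

ok, log = run_checklist(steps)
print(ok)  # True
for line in log:
    print(line)
```

Because the hardware and software are controlled, the same list should behave the same way every time it runs, which is what makes it reasonable to run without a human babysitting it.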

Wider systems

This low-cost technology can fit into wider systems in several ways.

Because RDFeasy meets the requirements of the AWS Marketplace, we can publish products such as RDFEasy :BaseKB Gold Compact Edition that users can deploy with a single click.

With a high level of automation, products based on changing data sources such as DBpedia Live and Freebase can be created and delivered in less than 24 hours.

Some data publishers might not want to hand over complete data sets to end users, but the same benefits can be had, with a higher level of control, by using the packaging technology to deploy products inside the publisher's system.

RDFeasy is open source software, so you can discover your own ways to use it.

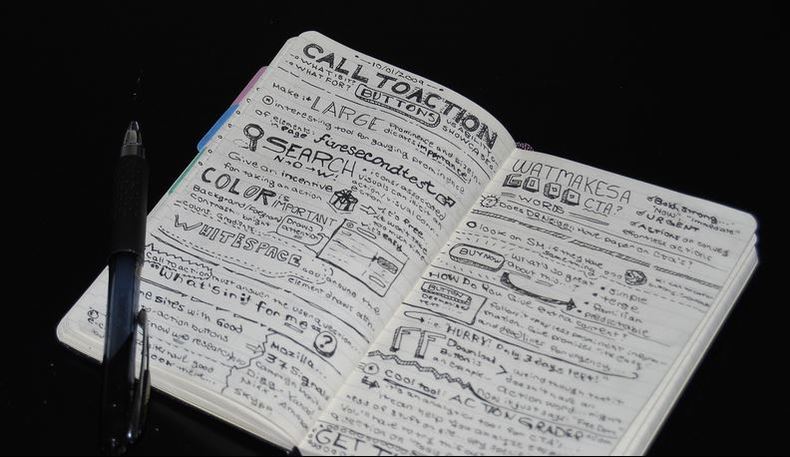

Call to action

RDFEasy :BaseKB Gold Compact Edition is the first RDFeasy product. A product of the Infovore toolkit, :BaseKB is a purified extract of the Freebase RDF that is compatible with standard RDF tools. Together with OpenLink Virtuoso 7 and an AWS instance type chosen for economical performance, this product can have you working with high-quality Freebase data in just minutes.

RDFeasy is supported on the Infovore and :BaseKB mailing list. Join now to keep in touch with developments.

I've been involved with commercial and academic e-publishing for a long time, and I've seen how conventional funding models are great for starting things but not so good for finishing them or keeping them going. I'm running a fundraiser on Gittip, and I think contributing to it is one of the most cost-effective things you can do to make Linked Data workable.

Bee image CC-BY-ND thanks to Johan J. Ingles-Le Nobel. Gas meter by Leo Reynolds. Scale image by darkbuffet. Cloud panorama by Melinda Swinford. Milling machine by Heartland Enterprises. Kite photo of resort by Pierre Lesage.

Creator of database animals and Bayesian brains